Ad agencies have been using artificial intelligence to optimize their campaigns for years.

But since generative AI went mainstream with the release of chatbots – namely, OpenAI’s ChatGPT – even seasoned users are rethinking what it means to harness AI responsibly. That means enhancing how humans work rather than replacing them, as well as ensuring AI doesn’t make the internet’s misinformation problem even worse.

As far as technological leaps go, AI has the potential to be as impactful as nuclear power, said Oleg Korenfeld, CTO at WPP’s CMI Media Group, during a panel discussion at AdExchanger’s Programmatic IO event in Las Vegas last week.

“If nuclear power wasn’t used to create a bomb out of the gate, we wouldn’t have an energy crisis,” Korenfeld said.

It’s up to technology and media companies to decide whether AI will be an atomic bomb that annihilates countless jobs or if it will power the workforce’s next leap in productivity.

Getting real about artificial intelligence

AI has (thankfully) replaced the metaverse as digital marketing’s obsession du jour.

In fact, the word “metaverse” was barely mentioned at Programmatic IO, remarked Hyun Lee-Miller, VP of media at independent agency Good Apple. Her observation was met with applause from the audience.

When it comes to technologies that can transform how consumers and brands interact, “AI is farther ahead on that than the metaverse ever was,” Lee-Miller added.

Still, there’s a lot of hype surrounding AI.

But Korenfeld pushed back against the idea that agencies are talking up AI solutions to stay relevant, a notion expressed during an earlier presentation.

“AI is just the natural next step in our job as media agencies to invest our clients’ dollars as effectively and efficiently as possible,” Korenfeld said.

The tech already plays a key role in campaign optimization because it can process user data at a greater scale and much faster than humans can. AI also powers the brand safety solutions agencies use to interpret content signals collected by bots and site crawlers, which improves media quality assessments.

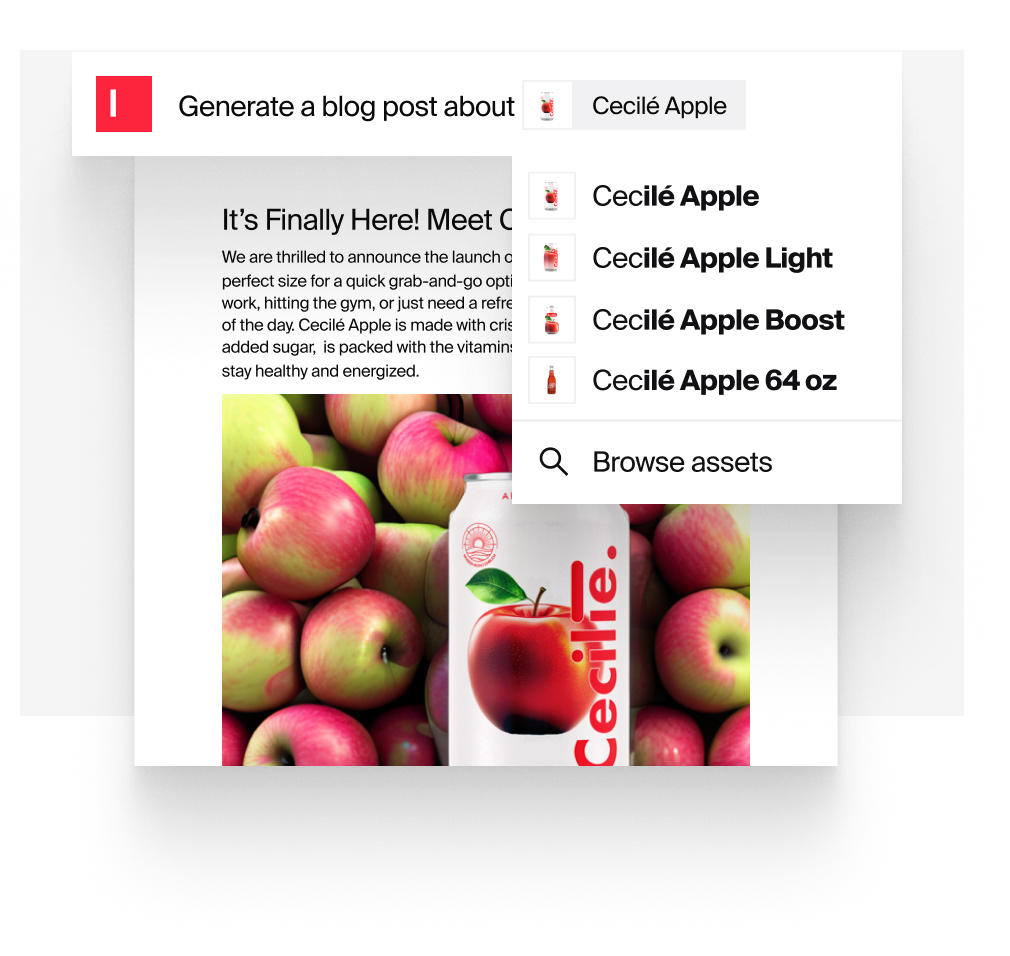

Automating lower-order ad ops tasks, like campaign fulfillment and tech stack management, is one of the most common applications for AI tech today, Korenfeld said.

Marketers are also using AI to help with media planning and to inform midflight optimization, which can have a marked impact on campaign efficiency. By applying AI-based targeting algorithms, for instance, Good Apple has been able to lower the cost per action for some campaigns by up to 80%.

And ad agencies are using generative AI to make their employees more productive across a variety of tasks, Lee-Miller said, including developing content, writing emails, aggregating news coverage and creating slide decks for presentations.

The main task facing all industries is ensuring AI remains a tool that enhances the work humans do rather than replacing it.

“This is not about taking away jobs,” Korenfeld said. “AI is creating new opportunities and future-proofing existing jobs.”

Defusing the bomb

But there is also a lot of magical thinking surrounding AI and its capabilities.

“The term ‘AI’ is actually kind of silly,” Korenfeld said. “The intelligence is not artificial. It’s actually human intelligence that allows us to take the data sets and apply them in effective ways.”

AI chatbots, as they exist now, simply pull their responses from content that was previously generated by humans. Put more cynically, “it’s all based on garbage search results,” Korenfeld said.

Because generative AI solutions are still unproven in how they manage and protect the information that trains them, Lee-Miller said, agencies need to be conservative about feeding them sensitive data.

“Don’t put confidential client information into ChatGPT,” she said. “Don’t input any agency proprietary data into the chat function – because we really have no idea where that information is going to go.”

Marketers should also be careful that the data sets they use to train AI models are unbiased and representative of the entire population, and companies that use AI must ensure that these solutions protect consumer privacy and provide an opt-out for data sharing. Not to mention the need to consider the intellectual property rights of artists and content creators.

Not only are regulators, including the FTC, watching, but there’s also a moral imperative for businesses to consider the ethics of AI.

AI stakeholders have a responsibility to stop generative AI from being used to propagate misinformation and negative content, Korenfeld said.

“We’ve dealt with human-based troll farms – now imagine that they are powered by machine learning,” he said. “The amount of misinformation content they can produce will be overwhelming.”
